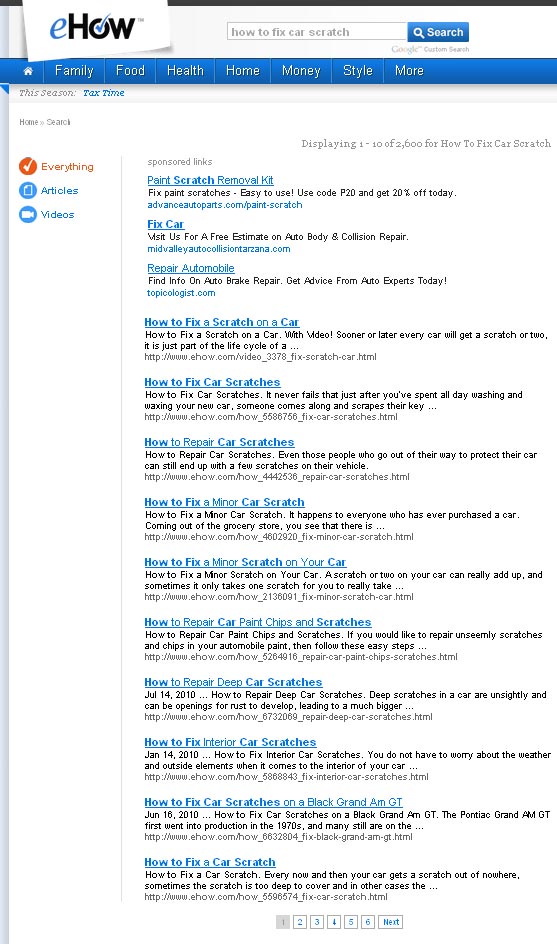

Google released the Penguin update a couple of weeks ago in an effort to rid its search results of webspam. The update targets the same kinds of things Google has always tried to keep out of its results, but it is supposed to make Google algorithmically better at catching them. That, combined with the regularly refreshed Panda update, could go a long way toward keeping Google’s results closer to spam-free than in previous years.

Meanwhile, Google continues to secure related patents. Bill Slawski, who closely follows patents in the search industry, recently pointed out some that may play a direct role in how Google handles webspam. Today, Google was granted another, as Slawski points out, and as usual, he does a wonderful job of making sense of the patent.

While the patent appears fairly complex, and there is more to it, part of it is about how Google can disassociate spam from legitimate content, which, at its most basic level, is the point of the Penguin update.

It’s called Content Entity Management. Here’s the abstract:

A first content entity and one or more associated second content entities are presented to one or more arbiters. Arbiter determinations relating to the association of at least one of the second content entities with the first content entity are received. A determination as to whether the at least one of the second content entities is to be disassociated from the first content entity based on the arbiter determinations can be made.

“It makes sense for Google to have some kind of interface that could be used to both algorithmically identify webspam and allow human beings to take actions such as disassociating some kinds of content with others,” explains Slawski. “This patent presents a framework for such a system, but I expect that whatever system Google is using at this point is probably more sophisticated than what the patent describes.”

The patent was filed as far back as March 2007.

On the point about human beings, who, as Slawski acknowledges, could be Google’s human raters (and/or others on Google’s team), one part of the patent says:

In one example implementation, arbiters can also provide a rationale for disassociation. The rationale can, for example, be predefined, e.g., check boxes for categories such as “Obscene,” “Unrelated,” “Spam,” “Unintelligible,” etc. Alternatively, the rationale can be subjective, e.g., a text field can be provided which an arbiter can provide reasons for an arbiter determination. The rationale can, for example, be reviewed by administrators for acceptance of a determination, or to tune arbiter agents, etc. In another implementation, the rational provided by the two or more arbiters must also match, or be substantially similar, before the second content entity 110 is disassociated from the first content entity 108. Emphasis added.
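The patent describes that agreement requirement only in prose. As a rough illustration, here is a minimal Python sketch of the "matching rationale" rule. The class and function names, the threshold of two agreeing arbiters, and the case-insensitive comparison of predefined categories are all assumptions made for this example rather than details from the patent, and the sketch does not attempt the "substantially similar" comparison of free-text rationales.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ArbiterDetermination:
    """One arbiter's verdict on a second content entity (e.g. a comment)."""
    disassociate: bool        # True if the arbiter wants it separated from the first entity
    rationale: Optional[str]  # hypothetical predefined category such as "Spam" or "Obscene"


def should_disassociate(determinations: List[ArbiterDetermination],
                        min_agreeing: int = 2) -> bool:
    """Hypothetical rule: disassociate only when at least `min_agreeing`
    arbiters vote to disassociate AND give the same rationale category."""
    # Count rationales among arbiters who voted to disassociate,
    # ignoring case and surrounding whitespace.
    votes = Counter(
        d.rationale.strip().lower()
        for d in determinations
        if d.disassociate and d.rationale
    )
    return any(count >= min_agreeing for count in votes.values())


# Usage: two arbiters flag a comment as spam, a third leaves it alone.
verdicts = [
    ArbiterDetermination(True, "Spam"),
    ArbiterDetermination(True, "spam"),
    ArbiterDetermination(False, None),
]
print(should_disassociate(verdicts))  # True
```

Requiring the rationales to match, not just the votes, is what keeps two arbiters who object for different reasons (say, "Spam" and "Obscene") from triggering a disassociation under this sketch.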

The actual background described in the filing talks a little about spam:

A first content entity, e.g., a video and/or audio file, a web page for a particular subject or subject environment, a search query, a news article, etc., can have one or more associated second content entities, e.g., user ratings, reviews, tags, links to other web pages, a collection of search results based on a search query, links to file downloads, etc. The second content entities can, for example, be associated with the first content entity by a user input or by a relevance determination. For example, a user may associate a review with a video file on a web site, or a search engine may identify search results based on a search query.

Frequently, however, the second content entities associated with the first content entity may not be relevant to the first content entity, and/or may be inappropriate, and/or may otherwise not be properly associated with the first content entity. For example, instead of providing a review of a product or video, users may include links to spam sites in the review text, or may include profanity, and/or other irrelevant or inappropriate content. Likewise, users can, for example, manipulate results of search engines or serving engines by artificially weighting a second content entity to influence the ranking of the second content entity. For example, the rank of a web page may be manipulated by creating multiple pages that link to the page using a common anchor text.

Another part of the lengthy patent document mentions spam in relation to scoring:

In another implementation, the content management engine 202 can, for example, search for one or more specific formats in the second content entities 110. For example, the specific formats may indicate a higher probability of a spam annotation. For example, the content management engine 202 can search for predetermined uniform resource locators (URLs) in the second content entities 110. If the content management engine 202 identifies a predetermined URL a second content entities 110, the content management engine 202 can assign a low association score to the one second content entities 110.
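The patent doesn't say which URLs count as "predetermined" or what a "low" association score looks like. The Python sketch below is purely illustrative: the blocklisted domains, the regular expression used to find links, and the 0.0/1.0 score values are hypothetical choices for this example.

```python
import re
from urllib.parse import urlparse

# Hypothetical "predetermined" domains; the patent does not name any.
PREDETERMINED_DOMAINS = {"cheap-pills.example", "spam-casino.example"}

# Simple pattern for http(s) links embedded in free text such as a review.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+", re.IGNORECASE)

LOW_SCORE = 0.0      # assumed value for a likely-spam association
DEFAULT_SCORE = 1.0  # assumed value when no predetermined URL is found


def association_score(text: str) -> float:
    """Return LOW_SCORE if the text links to a predetermined domain
    (or one of its subdomains), otherwise DEFAULT_SCORE."""
    for match in URL_PATTERN.finditer(text):
        try:
            # urlparse lowercases the hostname; strip a trailing dot left by prose.
            host = (urlparse(match.group()).hostname or "").rstrip(".")
        except ValueError:
            continue  # skip malformed links (e.g. broken IPv6 brackets)
        if any(host == d or host.endswith("." + d) for d in PREDETERMINED_DOMAINS):
            return LOW_SCORE
    return DEFAULT_SCORE


print(association_score("Great video! Buy now at http://www.cheap-pills.example/deal"))  # 0.0
print(association_score("Nice review, see https://www.example.org/page"))               # 1.0
```

Matching on the hostname rather than the raw string means a subdomain of a listed domain is caught too, while an unrelated site that merely mentions the name in its path is not.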

Another part, discussing comments, also talks about spam detection:

In another implementation, a series of questions can be presented to an arbiter, e.g., “Is the comment interesting?,” “Is the comment offensive?,” “Does this comment appear to be a spam link?” etc. Based on the arbiter answers, the content management engine 102 or the content management engine 202 can, for example, determine whether one or more second content entities are to be disassociated with a first content entity item.

The document is over 10,000 words of patent speak, so if you’re feeling up to that, by all means, give it a look. It’s always interesting to see the systems Google has patented, though it’s important to keep in mind that these aren’t necessarily being used in the way they’re described. Given how long it takes for a company to be granted a patent, there’s a good chance the company has moved on to a completely different process, or at least a much-evolved version. And of course, various systems can work in conjunction with one another. No single patent is going to provide a full picture of what’s really going on behind the scenes.

Still, there can be clues within such documents that can help us to understand some of the things Google is looking at, and possibly implementing.

Image: Batman: The Animated Series
