If your website consistently loads in under two seconds, congratulations! It is in the small minority of sites able to meet the new threshold for patience among Internet users. But if yours is like the vast majority of websites that take longer than two seconds to load — even for users with a decent browser and a fast connection — you might want to keep reading.

The sad truth is, after three or four seconds, nearly half of your website’s visitors may be gone, having bounced away in frustration. And for those users who do suffer through a slow page load, the slowness will negatively impact their satisfaction and their engagement with your site. Delays as small as a hundred milliseconds have been shown to decrease the amount of time users spend on a site, reduce conversion rates and reduce average order size. Slow pages also rank lower in searches, are less likely to get indexed, and are less likely to be recommended via word of mouth. In every conceivable way, when it comes to your goals for your website, slowness is the enemy.

Where does the slowness come from? Perhaps surprisingly, over 90% of the time users spend waiting for a given page to load typically occurs after the main HTML page has been retrieved from the server! So for a huge number of slow sites, the single biggest culprit is bloat and inefficiency in the page itself. Specifically, the main offender is often the sheer number of HTTP requests the page triggers. Each stylesheet, script and image referenced in a typical HTML page requires a separate round trip from the browser to request and receive the resource from a web server. The latency inherent in these HTTP requests delays the display of the page and users’ ability to interact with it. The overhead of these round trips can be massive, dwarfing the time it took to obtain the HTML document itself. So one effective rule of thumb for making your pages faster is to find ways to reduce the number of HTTP requests they require. This principle is the basis for many of the front-end optimization techniques recommended by web performance experts. Below are a few such techniques for reducing HTTP requests.

A quick disclaimer about the following recommendations: web performance optimization is a complex and evolving discipline, and brief articles like this one require glossing over some subtleties, details and trade-offs. So, please consider these points as an introduction and a starting point, rather than a definitive or prescriptive solution.

1. Stylesheets

Combine them:

Simply “concatenate,” or combine, multiple stylesheet files into one. If you have four .css files, for instance, instead of referencing them individually:

<link rel="stylesheet" type="text/css" media="all" href="/css/one.css">

/** contains: css rules in one.css **/

<link rel="stylesheet" type="text/css" media="all" href="/css/two.css">

/** contains: css rules in two.css **/

<link rel="stylesheet" type="text/css" media="all" href="/css/three.css">

/** contains: css rules in three.css **/

<link rel="stylesheet" type="text/css" media="all" href="/css/four.css">

/** contains: css rules in four.css **/

combine their contents into a single .css file like this:

<link rel="stylesheet" type="text/css" media="all" href="/css/concat-v2.css">

/** css rules in one.css **/

/** css rules in two.css **/

/** css rules in three.css **/

/** css rules in four.css **/

… so the content of each of these four files is included in the same order in the combined file. Keeping the order matters, because a later rule can override an earlier one. The same styling will apply to your page, but it costs a fraction of the HTTP requests to fetch it.

Put CSS in the head:

Also, be sure to put CSS in the HTML document’s <head> where it belongs, to speed up rendering. Inline <style> tags in the body can delay rendering and force the browser to re-paint the whole page, which is costly from a performance perspective. Minification and compression are highly recommended to reduce file size. Making your stylesheets cacheable will also greatly speed up subsequent page loads. (I’ll discuss caching and related topics in a future article in this series.)

2. Scripts

Combine them:

Just like with stylesheets, concatenating JavaScript files is a good way to reduce the number of HTTP requests in your page. If you have multiple scripts, combining them into a single script that is minified and compressed is recommended.

Note there are tradeoffs with concatenation, for both CSS and JS. For example, you may want to discriminate between styles and scripts needed in every page of your site versus those unique to a given page or section of your site. You may also want to prioritize early loading for certain scripts, while others are fine to delay until everything else is done. And you may choose to reference certain popular libraries like jQuery on a commonly used public CDN URL in an attempt to leverage the browser’s cache from other sites the user has visited. So there are valid reasons not to combine every single .css or .js file in every page. But in general, given a choice between loading a bunch of smaller files separately and loading a single larger combined file, the latter is usually the better choice for performance.

Put scripts at the bottom:

Scripts should be loaded at the very bottom of your HTML document before the closing </body> tag. The old-school practice of putting them in the <head> introduces severe performance problems, in part because scripts are “blocking” resources. In other words, when the browser encounters a script tag, it generally stops doing much of anything else at all until it has fetched and executed the script. So putting scripts at the end of the document and/or fetching them asynchronously is important for performance. There are different ways to account for dependencies of the page on external scripts or interdependencies among scripts, and to preserve their order of execution in a cross-browser compatible way. For complex scripting and client-heavy web applications, using an open source script loader can be a good solution. But the simplest approach is usually just to put them at the bottom, in the desired execution order. [To learn more about blocking scenarios and script loading approaches, I highly recommend the excellent Steve Souders book “Even Faster Web Sites”.]
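As a minimal sketch of the placement described above, the page below keeps its stylesheet in the <head> and its scripts just before </body>. All file names here are hypothetical. The async attribute is standard HTML, but support in older browsers varies, so treat it as an optional refinement rather than a requirement.

```html
<!DOCTYPE html>
<html>
<head>
  <meta charset="utf-8">
  <title>Example page</title>
  <!-- Stylesheets stay in the head so the page can render with its styles -->
  <link rel="stylesheet" href="/css/site.css">
</head>
<body>
  <h1>Page content comes first</h1>

  <!-- Scripts go last, in the order they must execute (hypothetical files) -->
  <script src="/js/library.js"></script>
  <script src="/js/app.js"></script>

  <!-- A script nothing else depends on can be fetched without blocking -->
  <script async src="/js/analytics.js"></script>
</body>
</html>
```

Here app.js can rely on library.js because plain script tags execute in document order. The async script may run at any point once it arrives, so it should not depend on the others.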

3. Images

Having too many images on a webpage is a very common performance problem that’s nearly as old as the <img> tag. Fortunately, there are optimization techniques which allow you to implement your chosen design with fewer HTTP requests. This can be achieved by combining image files with CSS sprites, by in-lining them with Data URIs, or by using pure CSS to eliminate the use of an image file altogether. Read on for details.

Combining with Sprites:

Combining images with “CSS spriting” has become a mainstream optimization technique. The idea is to combine a number of common images into a single, larger image file—and thus a single request. This typically includes images like navigation elements, buttons, logos, icons or any other static images which are central to a design and which rarely change. When the big master image containing the smaller ones in it is fetched, CSS is then used to precisely position specific parts of the same image in the right places in the page while hiding the other parts from view. The result is a page that looks the same as if it had loaded each image separately, but which only required one HTTP request instead of dozens.
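To make the positioning step concrete, here is a small sketch. It assumes a hypothetical master image, /img/sprite.png, that stacks three 16x16 icons vertically. The class names are also invented for illustration.

```html
<style>
  /* Every icon shares one image file, so the browser makes one image request */
  .icon {
    display: inline-block;
    width: 16px;
    height: 16px;
    background-image: url("/img/sprite.png"); /* hypothetical master image */
    background-repeat: no-repeat;
  }
  /* Shift the master image so only the wanted 16x16 region shows through */
  .icon-home   { background-position: 0 0; }
  .icon-search { background-position: 0 -16px; }
  .icon-cart   { background-position: 0 -32px; }
</style>

<span class="icon icon-home"></span>
<span class="icon icon-search"></span>
<span class="icon icon-cart"></span>
```

The fixed width and height act as a window onto the larger image, and the negative vertical offset slides the right icon into that window.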

Note this technique is fairly invasive, and can introduce a considerable maintenance burden. Since the images are no longer in separate files, editing even one of them can require generating a new master sprite image and sometimes editing the HTML and CSS using the sprite. Tools for automating CSS sprite generation do exist to help ease their maintenance costs [e.g. http://spriteme.org and http://compass-style.org/help/tutorials/spriting/]. However, it’s worth comparing alternative solutions for reducing HTTP requests associated with images.

Inlining with Data URIs:

It is possible to directly embed the contents of an image file in HTML or CSS, instead of referencing it as a separate file. For example, you can replace this:

<img src="tiny_image1.png" …>

with this:

<img src="data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAAAUAAAAFCAYAAACNbyblAAAAHElEQVQI12P4//8/w38GIAXDIBKE0DHxgljNBAAO9TXL0Y4OHwAAAABJRU5ErkJggg==" …>

This technique has some advantages over spriting. It involves zero extra HTTP requests, and it also makes the in-lined image immediately available for rendering as soon as the browser discovers it. It does increase the file size of the HTML page or the CSS file that includes it (Base64 encoding makes the embedded data roughly a third larger than the original file), so it’s definitely not appropriate for large images, but this tradeoff is often worth making for smaller files (e.g. under 4 KB). For sites with a bunch of small images, this can be extremely effective for reducing extra requests. As for caching, if the Data URI reference is in a CSS file or HTML page that can be cached, the image is effectively cached as well.
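The same idea works from a stylesheet. The sketch below reuses the Base64 data from the <img> example above as a CSS background. The class name and the surrounding markup are hypothetical.

```html
<style>
  /* The image bytes travel inside the stylesheet: no separate image request */
  .bullet {
    padding-left: 12px;
    background: url("data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAAAUAAAAFCAYAAACNbyblAAAAHElEQVQI12P4//8/w38GIAXDIBKE0DHxgljNBAAO9TXL0Y4OHwAAAABJRU5ErkJggg==") no-repeat left center;
  }
</style>

<p class="bullet">A paragraph marked with an embedded image</p>
```

Placing the Data URI in a cacheable CSS file rather than in each HTML page means the encoded image is downloaded once and reused across pages.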

Data URIs have maintenance overhead too. Changing an image requires changing every HTML or CSS file that references it. Also, some older and less capable but still-popular browsers don’t support Data URIs, so some conditional processing is required. This is a primary reason why Data URIs are not as popular as CSS sprites in the web performance world. But with the advent of automated performance optimization services (like Yottaa Site Speed Optimizer), the feature detection and maintenance burden can be removed altogether.

Replacing Images with Pure CSS:

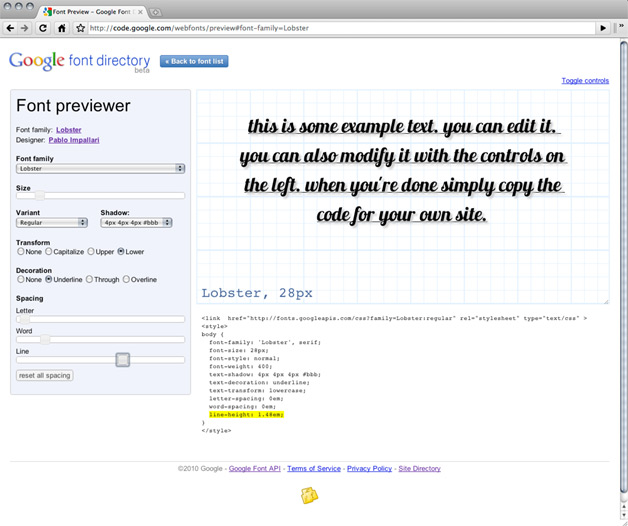

One smart approach to reducing HTTP requests is to forgo image files in favor of styling HTML elements with CSS. CSS can be used to great effect in implementing background colors, borders, buttons, hover effects, styled text, and even vector-scalable icons (via “icon fonts”). Using pure CSS is virtually always better for performance than using image files, and it can help make your site more maintainable too. Of course, cross-browser compatibility issues and “graceful degradation” of a complex design [or better, proper “progressive enhancement” of a simple basic design] are a challenge for every web developer and designer… but there are some fantastic open-source projects and communities that can really help. For example, HTML5Boilerplate and Twitter-Bootstrap provide great starting points for excellent, highly tested templates and user interfaces, and are essentially reference implementations for modern web development best practices. They are a great way to leverage the accumulated knowledge of experts and to learn about best practices for your markup.
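As one illustration, a button that might once have been a sliced image can be drawn with CSS alone. This is only a sketch: the class name, colors and link target are invented. Browsers that lack gradient or rounded-corner support simply fall back to the solid background color, which is progressive enhancement in miniature.

```html
<style>
  /* A button drawn entirely with CSS: no background image, no extra request */
  .button {
    display: inline-block;
    padding: 8px 16px;
    color: #fff;
    background-color: #2a7ae2; /* solid fallback color */
    background-image: linear-gradient(#4a90e2, #2a6fc9);
    border: 1px solid #1f5fb0;
    border-radius: 4px;
    text-decoration: none;
  }
  /* The hover effect needs no second image either */
  .button:hover {
    background-image: linear-gradient(#5a9ff0, #3a7fd9);
  }
</style>

<a class="button" href="/signup">Sign up</a>
```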

Splitting up the page:

Finally, for cases where the number of images can’t easily be reduced with one of these techniques, it’s worth considering breaking up the page itself into smaller pieces. Pagination, with use of Ajax to fetch additional content when the user requests it, is a way to support a smaller initial page with fewer HTTP requests. That is, instead of a massive page triggering large numbers of image requests, have the initial page require just a few such images. Then use pagination to incorporate the additional content and images in the user experience. This isn’t a casual decision — if not implemented carefully, splitting up a page can cause problems for SEO, accessibility, bookmarking and user-friendliness. But by following best practices, these issues can be surmounted, with the desired result of faster pages delivered to your site’s visitors.
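A rough sketch of this pattern follows. It assumes a hypothetical server endpoint, /gallery/items?page=2, that returns a fragment of <li> elements. The ids, paths and data-fragment attribute are likewise invented for illustration. The link has a real href, so the second page stays reachable for search engines and for users without JavaScript.

```html
<ul id="gallery">
  <!-- Only the first few images are part of the initial page -->
  <li><img src="/img/photo1.jpg" alt="Photo 1"></li>
  <li><img src="/img/photo2.jpg" alt="Photo 2"></li>
</ul>

<!-- A normal link that still works if the script below never runs -->
<a id="more" href="/gallery?page=2" data-fragment="/gallery/items?page=2">Show more</a>

<script>
  var more = document.getElementById("more");
  var gallery = document.getElementById("gallery");

  more.addEventListener("click", function (event) {
    event.preventDefault(); // stay on the page and fetch the fragment instead

    var xhr = new XMLHttpRequest();
    xhr.open("GET", more.getAttribute("data-fragment"));
    xhr.onload = function () {
      if (xhr.status !== 200) {
        window.location.href = more.href; // fall back to a full page load
        return;
      }
      // Append the returned <li> items; their images are requested only now
      gallery.insertAdjacentHTML("beforeend", xhr.responseText);
      // This sketch handles a single extra page, so retire the link
      more.parentNode.removeChild(more);
    };
    xhr.onerror = function () {
      window.location.href = more.href;
    };
    xhr.send();
  });
</script>
```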

To recap:

* Slow web pages impede your website’s goals;

* Over 90% of the time users spend waiting for a typical web page comes after the HTML has been downloaded;

* Reducing the number of HTTP requests triggered by your page is usually the best first step to making your pages faster;

* We reviewed some specific techniques for reducing the number of HTTP requests in a given page;

* We noted that automation can ease or remove the maintenance burden for more invasive optimization techniques.

Next time I’ll dive into another area of web performance optimization: doing more to leverage the browser’s capabilities, moving toward the client side of the client-server relationship. In the meantime I’m always interested in feedback and others’ thoughts on web performance. Please reply in the comments.

Heidi Voltmer, Adobe’s Director of Product Marketing, spoke with WebProNews and told us that Adobe focused its efforts on 4 main areas with these products. As she explained, the company emphasized speed and performance, improving features in its tools, making sure that the content produced in

Heidi Voltmer, Adobe’s Director of Product Marketing, spoke with WebProNews and told us that Adobe focused its efforts on 4 main areas with these products. As she explained, the company emphasized speed and performance, improving features in its tools, making sure that the content produced in  Staples.com has successfully achieved a consistent mobile experience on all sources of traffic by automatically directing customers to the mobile optimized view of its site regardless of entry point. If a user is searching for paper products via Google, for example, they will be led to an optimized mobile experience of that product page, despite coming from an outside source.

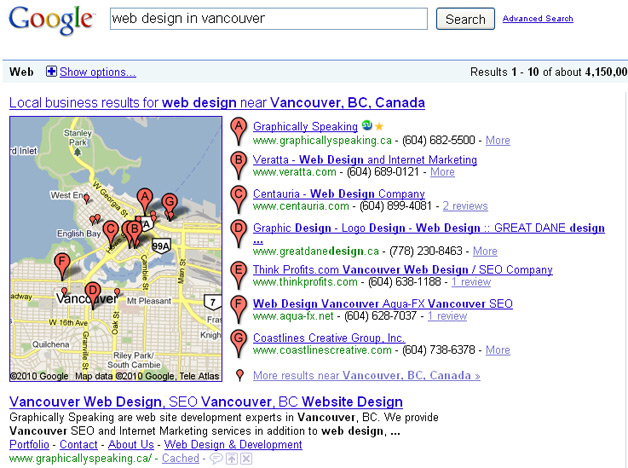

Staples.com has successfully achieved a consistent mobile experience on all sources of traffic by automatically directing customers to the mobile optimized view of its site regardless of entry point. If a user is searching for paper products via Google, for example, they will be led to an optimized mobile experience of that product page, despite coming from an outside source.