Watch the Keynote live at live.dev.webpronews.com.

At SMX West in Santa Clara, the State of the Search Union keynote is taking place today. It’s moderated by Chris Sherman, Executive Editor of Search Engine Land, and features SEL Contributing Editor Vanessa Fox, Google Analytics Evangelist Avinash Kaushik, Yahoo Director of Search Marketing David Roth, and Misty Locke, President, Range Online Media and Chief Strategy Officer of iProspect. The official description for this keynote says:

We’ve just come through the most turbulent period in history for search marketers. Economic disruption, massive algorithm updates, the disappearance of a major player through consolidation with one of its former competitors… these events and others have reshaped the search landscape, creating both challenges and opportunities for search marketers. On this panel we’ve assembled some of the sharpest minds in search to discuss where things stand and where we’re going – you won’t want to miss the insights and recommendations from this group of super-savvy panelists.

I will liveblog the event below when it starts at 9:00am Pacific/12:00pm Eastern (please forgive typos):

Liveblogging starts:

12:00 EST: Should be starting any time now…

12:03: People are taking the stage…getting set up with audio…

12:04: Sherman: An interesting year in search. Often not a whole lot happens; it’s usually just Google, Google, Google. In the past year, we’ve seen more radical change than in the past 15 years or so, with no sign of the change letting up…

12:05: A few questions. The key question: when we were here last year, we were in the early stages of an economic meltdown…everybody was uncertain about what was going to happen…search itself was still relatively young, so what was going to happen to the industry? So now, how are we doing?

Sound problems…Dave says that, as a search marketer, the downturn gave opportunities to show stuff and shift strategies to support business goals in a shifting landscape. Maybe you used to optimize for ROI; now it’s a different metric…a shift back to SEO, not just the paid side.

Misty: E-commerce still did well in some areas. Some clients had record-breaking months at times due to search, even in the downturn. Some marketers were utilizing different techniques, driving revenue and recurring customer loyalty, and combining search with other marketing channels…flexible companies saw growth.

12:09: Vanessa: Super Bowl: Pepsi decided to spend its money on social instead. Interesting that some companies think online is a better way to go… One thing from the Super Bowl ads…so many large brands seem only now to understand that search is important. Across the board, big brands did better in search during the Super Bowl than last year. A lot of work left, though.

12:10: Avinash: Emboss your brand on somebody’s brain (branding)…search can do this. At the end of the day, when people want to run a branding campaign…what do you want out of it? A one-night stand? A long-term relationship? Depending on what you want, search is a massively effective way to get to the right kind of people…

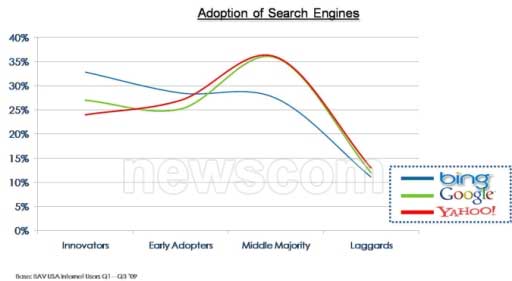

12:13: Microsoft/Yahoo deal: Dave: Since we got regulatory approval, the integration is on. It’s a huge project, with a lot of resources from both companies. The proof will be in the pudding as advertisers start to migrate…I’m Yahoo, the advertiser. We’re going to continue to innovate around the customer experience in search, with products that live outside of the index…

12:14: Sherman: Animosity? Integration of cultures? Dave: It’s just beginning. A large amount of resources at Yahoo will be moved over to work with MS, and there will be a portion of Yahoo that stays at Yahoo. A lot remains to be seen; the clearance is still too new…people are hard at work. Everybody on the project understands that this is critical. It absolutely MUST work.

12:15: Misty: Clients are excited about the deal. It allows a viable number 2. It may not drastically change how they upload campaigns, but it does allow them to shift focus and strategy for Bing…a 60/40 time split between Google and Bing…excited about volume…reach…

12:16: Some changes in how results are shown will open up new ways to use Bing for advertising. New customers…cashback is a big driver. Marketers in general have been slow to adopt…

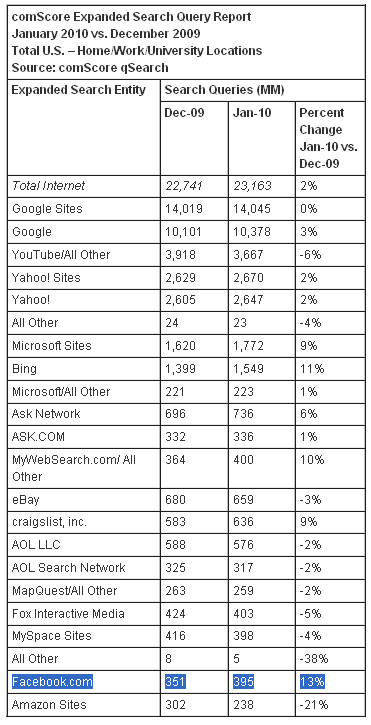

12:17: Sherman: Two major players: is the field shrinking to a couple of giants? Or now two strong players in a growing market? Avinash: Competition is a good thing; it gets everybody to innovate. Important to realize…it’s prudent to have a portfolio strategy with acquisitions: think about the content network, YouTube, search, DoubleClick. People get far too obsessed with Microsoft vs. Yahoo vs. Google. You should already have had a very effective strategy across all search engines.

12:18: Avinash says a blog post of his got way more traffic from Bing for the word "analytics" than from Google.

12:19: You will find new customers and use dollars more effectively with the portfolio strategy.

12:19: Vanessa: Waiting to see how things shake out with the integration. SearchMonkey? BOSS? Waiting to see how it works out…Yahoo did try to make a play for innovation. Don’t know how motivated they’ll be…it will be great if they are. Reserving judgment.

12:20: Vanessa: Hopefully we won’t lose all the Yahoo-ness.

12:21: Caffeine: rolled out after the holidays? No, still just one data center. Sherman to Vanessa: What impact is Caffeine going to have on SEO? Is Google going to continue in the spirit it always has of providing tools/insight? Vanessa: Changes: social, real-time, local, etc. Things will ramp up more and more. As for Caffeine specifically, I don’t know that it’s going to impact SEO that much. It’s just back-end; on their side, it’s a better way to crawl the web. Their hope is just to do it better, and that’s a benefit for site owners. It’s not a rankings impact, except in a more indirect way. I don’t think there’s much you need to do from an SEO perspective. I hope they keep reaching out. When I was there, I loved being able to go out and see what people needed. I don’t see a reason why they would stop doing that, and they have kept doing it.

Avinash: If every Googler woke up and spent the entire day answering questions for webmasters, it still wouldn’t answer all the questions. How can we help people at scale? Webmaster Tools, and a number of other tools that Google puts out. There have been a few more releases for Webmaster Tools over the last six months. You’ll continue to see Google put out tools that allow this kind of self-help at scale and help you make better decisions with search data. He’s orgasmic about the amount of data Google has put out there in terms of your ability to make better decisions, and loves the access you have to Google’s organic search data…Insights for Search, Ad Planner, etc.

12:26: With Google Ad Planner, you can look at certain demographics and sell to people who have done a particular search…target them with very relevant display ads using search data. We’ll continue to put tools like this in your hands.

12:27: Sherman: Back to Dave on Microsoft/Yahoo. Dave: Yahoo’s staying committed to search. On the sales side, it’s very experienced in search and display and will maintain high touch with big customers. Small businesses and self-service customers will be more directly managed on the Microsoft side.

The goal is to work on the adCenter platform and make it the platform of choice on Microsoft as well as Yahoo.

12:29: Sherman: Is search in Yahoo’s DNA? Dave: There’s rich data from search; now the idea is what we can do with the data and assets we have to create a better ad for the consumer…behavioral, targeting, etc. Teams of people are focused on new ad products…

12:30: Sherman: Is social media replacing search as the way people interact on the web? I don’t see it, but Facebook has huge stats. What’s happening with that, and what can search marketers do? Vanessa: A reporter last week said search is such old news, so why still do search? She said people are still searching, they’re searching more and more, and they’re going to keep doing so. That doesn’t mean you shouldn’t think about social media, but it’s about audience. It’s not an either/or thing. Misty agrees.

12:32: Misty: With social you can do traditional things outside of search. Boundaries are dissolving between different marketing strategies…

12:33: Misty: Social/real-time can drive search volume. Marketers will find new ways…it’s a new beginning for search.

12:34: Dave: Big companies look to search marketers for expertise in this. Avinash: The media loves stories framed as Facebook/Google/Twitter…as if the world is all about one thing or the other…"video killed the radio star"…it’s not like that here. He once said Twitter was the dumbest thing on earth…now he uses it and thinks it’s the coolest thing since sliced bread. It’s important to realize that as you think about different elements, you use them for what they’re good at. The worst strategy is the TV strategy…shouting at people…that’s why most big brands have a pathetic number of followers…they’re not having conversations like Danny Sullivan.

12:36: Sherman: Managing info overload? How do you make advertising a legitimate business? Will search be absorbed? Siloing? Avinash: What we do today is try to influence people…there are many ways to do that now…one emerging way is to have these conversations (is it going to survive?)…the single greatest reason for Google’s success is relevance…advertisers (Mad Men style)…that way is dead.

12:37: Avinash: When I work with some of the largest companies…the small ones will use search to get people to raise money, build brand awareness…a very broad range… Dave: Siloing? Exactly the opposite. Social media is finally…we used to talk about the promise of engaging with a consumer, and social media is the first channel that’s accomplished this. It’s forcing the breaking down of the silos. Who should own social media in your organization? Who owns the paper in your organization? It’s breaking down the silos.

Misty: Everybody wants to own a piece of that because it can be so influential. PR, marketing, brands, and search are all involved, and all can communicate more effectively. In the end we’ll win, because we’re tapping into what the consumer is truly looking for. Customers can tell stories for us…make the pieces of our brand that they love more accessible to them…

12:41: Vanessa: We have to go the opposite way of silos. If you think about the data side, if all the areas of marketing can share data, there’s going to be so much more engagement…

12:42: Sherman: People are engaging and being social…but we’re still in the young, naive days in terms of black hat use…unethical marketers using data? What’s going to happen if the government steps in and says privacy is an issue…"we don’t understand the issues, but we’ll legislate anyway"?

12:43: Misty thanks the government for paying attention to privacy (over healthcare, etc.). Avinash talks about spam comments in Egyptian tombs…spam has been a problem for a very long time and will continue to be…just try to use intelligent ways to suppress it as much as possible and provide incentives to do the right thing.

12:44: Misty: Yes, there will always be spam…marketers are always going to find a way to use/exploit media…years ago it was different people screaming "you’re a black hat"…now it’s the users who are policing the good/bad. Consumers can sniff out whether you’re authentic. The hard part is being so authentic that you don’t get called out by the consumer…

12:46: Dave: Regulation carries a fair degree of risk…it’s a double-edged sword. There’s potential to do unethical and criminal things…he mentions a recent product from Google that raised privacy concerns (doesn’t necessarily blame Google)…there’s not enough understanding in regulation to deal with it…some degree may be needed, but Capitol Hill’s understanding of the internet is frightening…education is required…he fears that legislators aren’t up to speed.

12:48: Sherman: Shifting from a US perspective to a global one: what is the opportunity for search marketers to go global, and how do you deal with restrictions in other countries like China? Vanessa: You’ve always had to understand your audience where they are. It’s not just about geography/translating…it’s understanding the culture. Government stuff is a whole other set of issues…it starts with understanding the market before you go into it.

12:49: Avinash: There tend to be very sophisticated marketers in other countries. Those that are doing web are sophisticated…there just aren’t enough of them. Many countries are extremely young, and there are opportunities in these countries…to Vanessa’s point…you have to truly engage and understand the market. Misty: Some other countries are doing more cool and unique things in social…

12:52: Dave: There’s a lot to be learned from other countries. Some are leapfrogging…some aren’t even using email, but going straight to Facebook, etc.

12:52: Mobile is here, but maybe it’s not what we thought it was going to be…we’re about to see things change very drastically…the iPhone was a game changer…we’re heading very quickly in a direction we didn’t anticipate a few years ago…

12:53: Sherman: Changes for search marketers? Avinash tells a story about his kids wanting to see something and using his Nexus One…he used Google search, it transcribed what he said through voice search, used his location, and gave him driving directions fast…I wonder whether we, as SEOs/marketers, have thought of this as the use case: sites optimized so people can do these things…for their business…not just on Google…I really think…I have to rethink my search strategy…not even a fragment of marketers in the search business are thinking about that as search. He encourages marketers to think of mobile like that…not just as a WAP version of a web page.

Misty: Are all your local listings up to date and ready for navigation, and are your products easy to access? Then think about advertising…and the usability of your site on mobile…

12:56: Vanessa: Avinash is right. Ubiquity in mobile opens the door to a lot of new opportunities. It’s not that new ways of searching will replace Google; they just present different ways. People don’t think of using some things as searching, but it is…Urbanspoon, etc. Look at where the new opportunities are.

12:57: Sherman and the audience thank the panel. It’s over.

"Making sure well-written articles get found online involves continuous hard work and search engine knowledge," says Berger. "We know that in order to help our writers get their stories found, we need to increase our expertise in the area of search." That’s why the company just hired search strategist Aaron Bradley as its SEO Director to implement new SEO tactics across its articles.