Google has posted a new Webmaster Help video. As usual, Head of Web Spam Matt Cutts has answered a user-submitted question. The question is:

“When you notice a drastic drop in your rankings and traffic from Google, what process would you take for diagnosing the issue?”

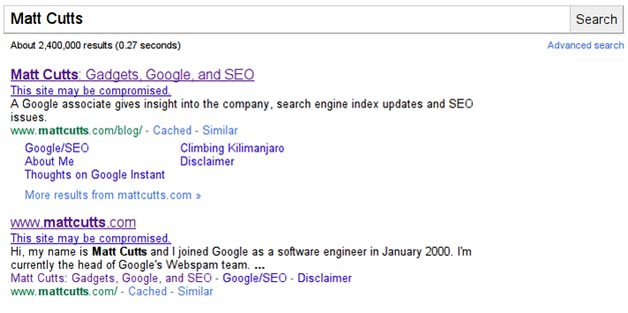

“One thing I would do very early on, is I would do ‘site:mydomain.com’ and figure out are you completely not showing up in Google or do parts of your site show up in Google?” he begins. “That’s also a really good way to find out whether you are partially indexed. Or if you don’t see a snippet, then maybe you had a robots.txt that blocked us from crawling. So we might see a reference to that page, and we might return something that we were able to see when we saw a link to that page, but we weren’t able to see the page itself or weren’t able to fetch it.”
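
For the robots.txt case Cutts mentions, a rough local check is possible with Python's standard library. The sketch below is ours, not Google's: the helper name `googlebot_allowed` and the sample rules are hypothetical, and Python's parser does not match Google's own robots.txt handling in every detail (wildcards, for example), so treat the result as a hint rather than a verdict.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt: str, url: str) -> bool:
    """Return True if the given robots.txt text lets Googlebot fetch url.

    Hypothetical helper for illustration; it uses Python's parser,
    which only approximates how Google reads robots.txt.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


# Assumed example: rules left over from a staging server that block everything.
staging_rules = "User-agent: *\nDisallow: /\n"
print(googlebot_allowed(staging_rules, "https://mydomain.com/page.html"))  # False
```

To check a live site, you would paste the contents of its `/robots.txt` into the first argument.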

“You might also notice in the search results if we think that you’re hacked or have malware, then we might have a warning there,” he adds. “And that could, of course, lead to a drop in traffic if people see that and decide not to go to the hacked site or the site that has malware.”

Then it’s on to Webmaster Tools.

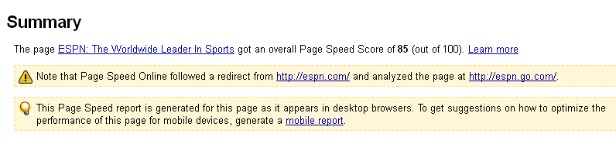

“The next place I’d look is the webmaster console,” says Cutts. “Google.com/webmasters: prove that you control or own the site in a variety of ways. And we’re doing even more messages than we used to do. Not just things like hidden text, parked domains, doorway pages. Actually quite a few different types of messages that we’re publishing now, when we think there’s been a violation of our quality guidelines. If you don’t see any particular issue or message listed there, then you might consider going to the Webmaster Forum.”

“As part of that, you might end up asking yourself, is this affecting just my site or a lot of other people? If it’s just your site, then it might be that we thought that your site violated our guidelines, or of course, it could be a server-related issue or an issue on your site, of course, on your side,” he says. “But it could also be an algorithmic change. And so if a bunch of people are all seeing a particular change, then it might be more likely to be something due to an algorithm.”

We’ve certainly seen plenty of that lately, and will likely see more tweaks to Panda for the time being, based on this recent tweet from Cutts:

Weather report: expect some Panda-related flux in the next few weeks, but will have less impact than previous updates (~2%).

“You can also check other search engines, because if other search engines aren’t listing you, that’s a pretty good way to say, well, maybe the problem is on my side. So maybe I’ve deployed some test server, and maybe it had a robots.txt or a noindex so that people wouldn’t see the test server, and then you pushed it live and forgot to remove the noindex,” Cutts continues in the video. “You can also do Fetch as Googlebot. That’s another method. That’s also in our Google Webmaster console. And what that lets you do is send out Googlebot and actually retrieve a page and show you what it fetched. And sometimes you’ll be surprised. It could be hacked or things along those lines, or people could have added a noindex tag, or a rel=canonical that pointed to a hacker’s page.”
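
Once you have a page's HTML in hand (from Fetch as Googlebot or your own browser's view-source), the two problems Cutts names here, a stray noindex and an unexpected rel=canonical, can be spotted mechanically. This is a minimal sketch using only Python's standard library; the class and function names are our own, and it only looks at meta tags and link elements in the markup, not at HTTP headers such as `X-Robots-Tag`.

```python
from html.parser import HTMLParser


class IndexingSignals(HTMLParser):
    """Collects noindex meta tags and the rel=canonical target from HTML."""

    def __init__(self):
        super().__init__()
        self.noindex = False
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us.
        attr = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attr.get("name", "").lower() in ("robots", "googlebot"):
            if "noindex" in attr.get("content", "").lower():
                self.noindex = True
        elif tag == "link" and "canonical" in attr.get("rel", "").lower().split():
            self.canonical = attr.get("href")


def inspect_html(html: str):
    """Return (noindex_found, canonical_url_or_None) for a page's HTML."""
    parser = IndexingSignals()
    parser.feed(html)
    return parser.noindex, parser.canonical


# Assumed sample markup, standing in for a page pushed live from a test server.
sample = (
    '<html><head><meta name="robots" content="noindex, nofollow">'
    '<link rel="canonical" href="https://example.com/other"></head></html>'
)
print(inspect_html(sample))
```

If the canonical URL points at a domain you do not recognize, that is worth treating as a possible sign of the hacking scenario described above.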

“We’ve also seen a few people who, for whatever reason, were cloaking, and did it wrong, and shot themselves in the foot,” he notes. “And so they were trying to cloak, and instead they returned normal content to users and completely empty content to Googlebot.”
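
A crude way to catch the "empty content to Googlebot" mistake yourself is to request the same URL with two different User-Agent headers and compare what comes back. The sketch below is an illustration under assumptions: the function names and the browser User-Agent string are ours, and a server that decides by IP address rather than User-Agent will not be caught this way, which is why Fetch as Googlebot remains the more reliable check.

```python
import urllib.request

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"  # assumed stand-in for a browser


def fetch(url, user_agent):
    """Fetch url with the given User-Agent header and return the raw body."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read()


def compare_user_agents(url, fetcher=fetch):
    """Compare the body served to a browser-like client and a Googlebot-like one.

    fetcher is injectable so the comparison can be exercised without a network.
    """
    browser_body = fetcher(url, BROWSER_UA)
    bot_body = fetcher(url, GOOGLEBOT_UA)
    return {
        "browser_bytes": len(browser_body),
        "googlebot_bytes": len(bot_body),
        "googlebot_empty": len(bot_body.strip()) == 0,
        "identical": browser_body == bot_body,
    }
```

Calling `compare_user_agents("https://mydomain.com/")` against your own site would return the summary dictionary. Pages with dynamic content can legitimately differ between two requests, so `googlebot_empty` is the field to watch for the failure Cutts describes.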

“Certainly if you’ve changed your site, your hosting, if you’ve revamped your design, a lot of that can also cause things,” he says. “So you want to look at if there’s any major thing you’ve changed on your side, whether it be a DNS, host name, anything along those lines around the same time. That can definitely account for things. If you deployed something that’s really sophisticated AJAX, maybe the search engines are[n’t] quite able to crawl that and figure things out.”

Cutts, of course, advises filing a reconsideration request once you think you’ve figured out the issue.

A tip of the hat goes to Josh Constine at InsideFacebook for
