Yahoo has begun testing organic and paid search listings from Microsoft. Up to 25% of its search traffic in the U.S. may see organic listings from Microsoft, and up to 3.5% may see paid listings from Microsoft adCenter. I guess you could say that the early stages of the Search Alliance’s transition have begun.

Will you place more emphasis on Bing optimization as it integrates with Yahoo Search? Let us know.

"The primary change for these tests is that the listings are coming from Microsoft," says Yahoo’s VP of Search Product Operations, Kartik Ramakrishnan. "However, the overall page should look the same as the Yahoo! Search you’re used to – with rich content and unique tools and features from Yahoo!. If you happen to fall into our tests, you might also notice some differences in how we’re displaying select search results due to a variety of product configurations we are testing."

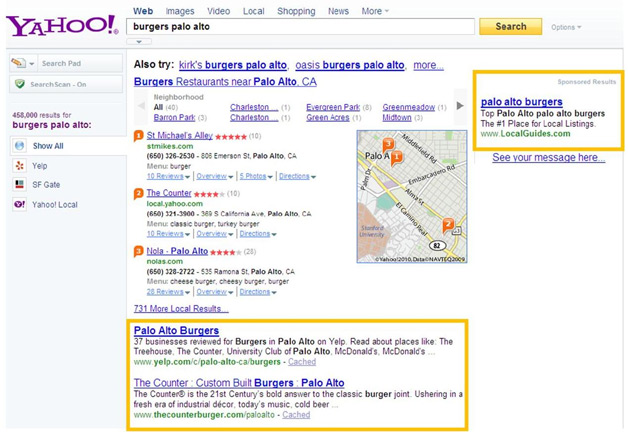

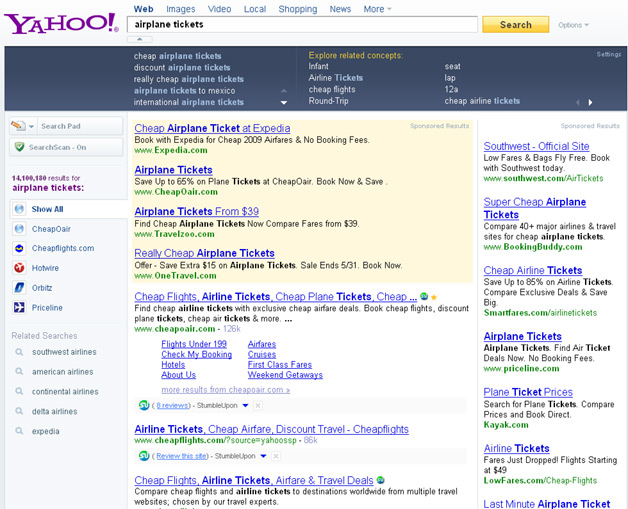

Yahoo provides the following example, in which the Microsoft-powered parts are represented by the boxes:

As far as SEO is concerned, the Yahoo Search Marketing Team provides the following tips for organic search:

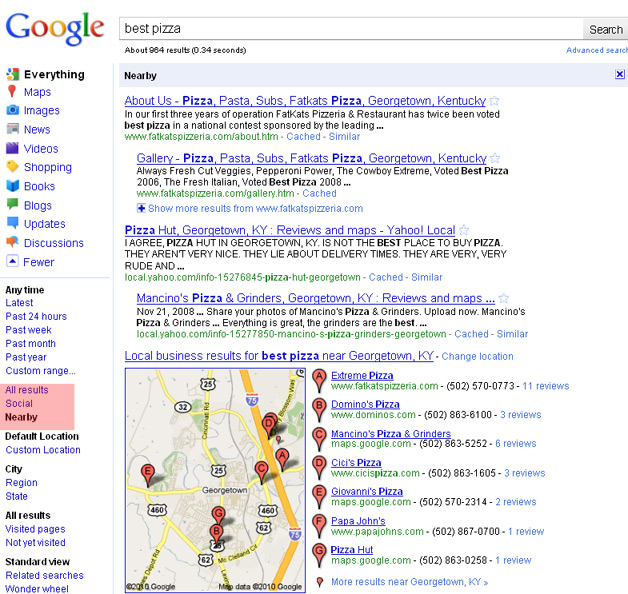

- Compare your organic search rankings on Yahoo! Search and Bing for the keywords that work best for you.

- Decide if you’d like to modify your paid search campaigns to compensate for any changes in organic referrals that you anticipate.

- Review the Bing webmaster tools and optimize your website for the Microsoft platform crawler, as Bing listings will be displayed for approximately 30% of search queries after this change, according to comScore.

Microsoft’s Satya Nadella also says that "now is a good time for you to review your crawl policies in your robots.txt and ensure that you have identical policies for the msnbot/Bingbot and Yahoo’s bots. Just to note, you should not see an increase in bingbot traffic as a result of the transition."
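For anyone who wants to check this quickly, here is a rough sketch of one way to compare crawl policies across bots, using Python's standard `urllib.robotparser`. It is only an illustration, not something Yahoo or Microsoft provides. The function name, the sample robots.txt, and the test paths are made up for the example, and "Slurp" is assumed to be the user-agent token your rules use for Yahoo's crawler.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens to compare. "Slurp" is assumed here to be the token
# your robots.txt uses for Yahoo's crawler; adjust to match your own file.
CRAWLERS = ["msnbot", "bingbot", "Slurp"]


def crawl_policy_mismatches(robots_txt, paths, crawlers=CRAWLERS):
    """Return {path: {crawler: allowed}} for paths the crawlers are treated differently on."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    mismatches = {}
    for path in paths:
        verdicts = {bot: parser.can_fetch(bot, path) for bot in crawlers}
        # More than one distinct verdict means the policies are not identical.
        if len(set(verdicts.values())) > 1:
            mismatches[path] = verdicts
    return mismatches


# Hypothetical robots.txt: msnbot and Slurp have their own rules,
# while bingbot falls through to the catch-all "*" group.
SAMPLE_ROBOTS_TXT = """\
User-agent: Slurp
Disallow: /private/

User-agent: msnbot
Disallow: /private/
Disallow: /drafts/

User-agent: *
Disallow:
"""

if __name__ == "__main__":
    sample_paths = ["/index.html", "/private/a.html", "/drafts/post.html"]
    for path, verdicts in crawl_policy_mismatches(SAMPLE_ROBOTS_TXT, sample_paths).items():
        print(path, verdicts)
```

In this sample, bingbot has no group of its own, so it inherits the permissive catch-all rules and ends up with a different policy than msnbot. That is the kind of gap worth catching before the new Bingbot takes over. To run it against a live site, paste in the contents of your own robots.txt and a handful of representative paths.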

The Bingbot is designed to crawl non-optimized sites more easily. The new Bingbot will replace the existing msnbot in October. More on this here.

Also note that the new Bing Webmaster Tools experience is live. This has been completely redone with a bunch of new features (and more features to come). Bing Webmaster Tools Senior Product Manager Anthony M. Garcia summarizes:

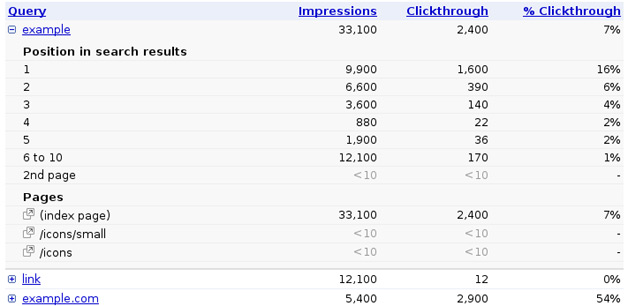

"The redesigned Bing Webmaster Tools provide you a simplified, more intuitive experience focused on three key areas: crawl, index and traffic. New features, such as Index Explorer and Submit URLs, provide a more comprehensive view as well as better control over how Bing crawls and indexes your sites. Index Explorer gives you unprecedented access to browse through the Bing index in order to verify which of your directories and pages have been included. Submit URLs gives you the ability to signal which URLs Bing should add to the index. Other new features include: Crawl Issues to view details on redirects, malware, and exclusions encountered while crawling sites; and Block URLs to prevent specific URLs from appearing in Bing search engine results pages. In addition, the new tools take advantage of Microsoft Silverlight 4 to deliver rich charting functionality that will help you quickly analyze up to six months of crawling, indexing, and traffic data. That means more transparency and more control to help you make decisions, which optimize your sites for Bing."

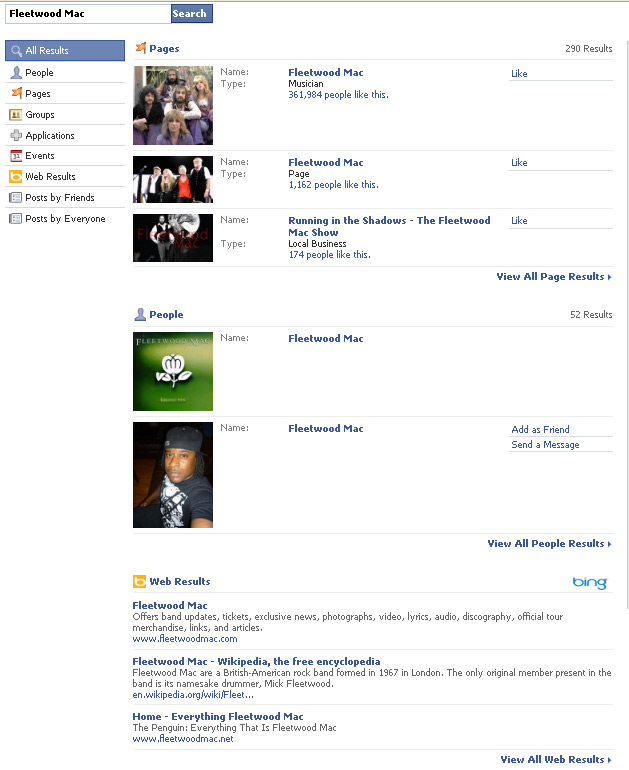

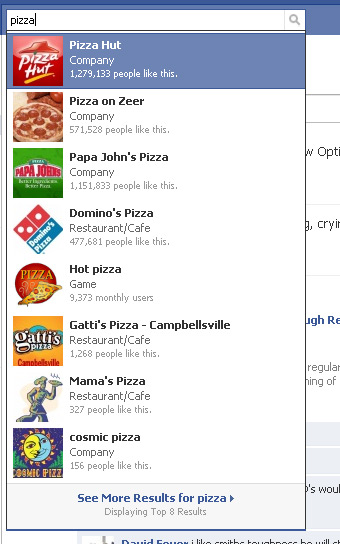

WebProNews spoke with Janet Driscoll Miller of Search Mojo out at SMX a while back. She had presented on the topic of Bing SEO vs. Organic SEO. As she notes, some businesses actually see better results from Bing than they do from Google, and when Yahoo starts fully using Bing for search, Bing’s share of the search market is going to grow dramatically (it also powers search in Facebook, let’s not forget).

Yahoo will be integrating Microsoft’s mobile organic and paid listings in the U.S. and Canada in the coming months. The company anticipates that U.S. and Canada organic listings in both the desktop and mobile versions of its search will be fully powered by Microsoft as early as August or September. This of course depends on how the testing goes.

Yahoo and Microsoft have created new joint editorial guidelines for advertisers that will become effective in early August. These can be found here.

As we’ve discussed, Bing optimization is about to get more important, and now the time has come to really look at your Bing strategy if you’ve not already been doing so.

Are you prepared for the transition? Comment here.
