So the controversial Google Panda update is now live throughout the world (in the English language). Since Google’s announcement about this, data has come out looking at some of the winners and losers (in terms of search visibility) from both SearchMetrics and Sistrix. Hopefully we can all learn from this experience, as search marketing continues to be critical to online success.

Has Panda been good to you? Comment here.

Throughout this article, keep a couple of things in mind. The SearchMetrics and Sistrix data are limited to the UK and Europe. The Panda update has been rolled out globally (in English). It seems fair to assume that while the numbers may not match exactly, there are likely parallel trends in visibility gain or loss in other countries’ versions of Google. So, while the numbers are interesting to look at, they’re not representative of the entire picture – more a general view.

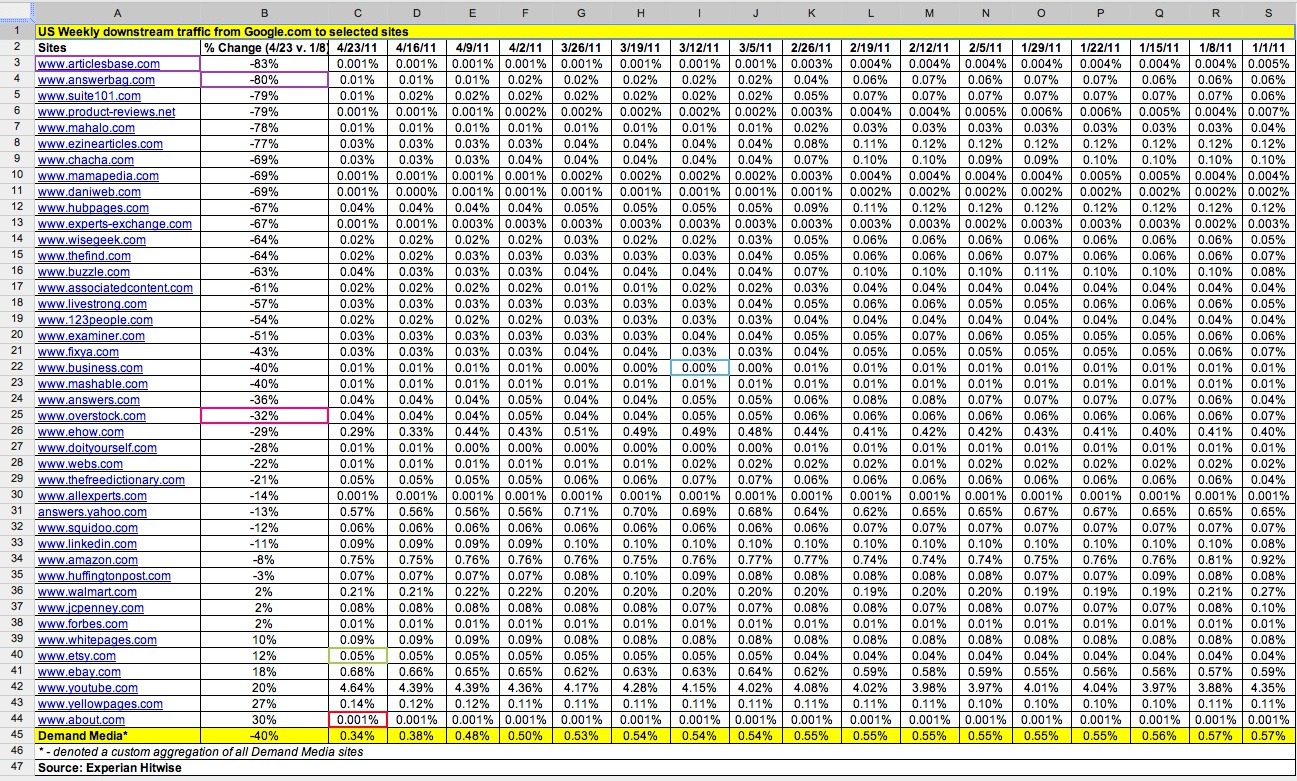

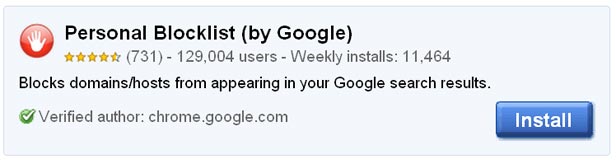

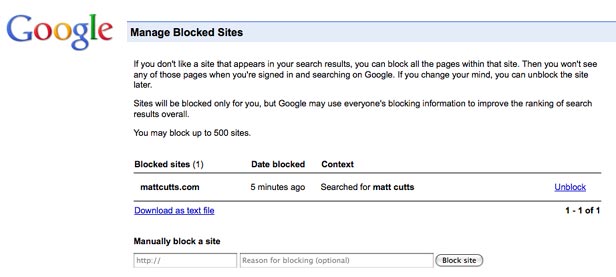

Also keep in mind that Google has made new adjustments to its algorithm in the U.S. In the announcement, they said the new tweaks would affect 2% of queries (in comparison to 12% for the initial Panda update). Also note that they’re now taking into consideration the domain-blocking feature in “high confidence” situations, as the company describes it. So that may very well have had an impact on some of these sites in the U.S.

As there were the first time around, the new global version of Panda has brought with it numerous interesting side stories. First, let’s look at some noteworthy sites that were negatively impacted by the update.

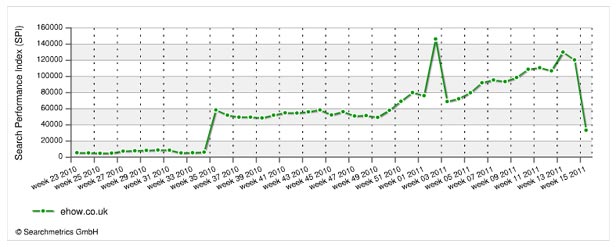

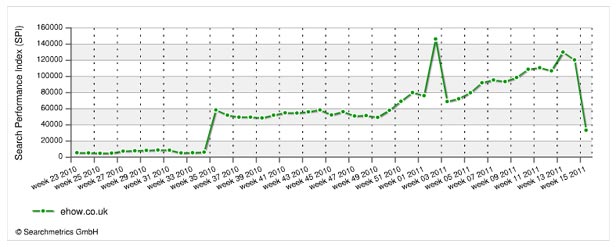

eHow

eHow managed to escape the U.S. roll-out of the Panda update, and actually come out ahead, but the site’s luck appears to have changed, based on the SearchMetrics/Sistrix data. According to the SearchMetrics data, eHow.co.uk took a 72.30% hit in visibility. eHow.com took a 53.46% hit. Sistrix has eHow.co.uk as its top loser with a -84% change.

In the U.S., after looking at some queries we tested before, it does appear that eHow has lost some positioning in some areas – most notably the “level 4 brain cancer” example we’ve often referenced to make a point about Google’s placement of non-authoritative content over more authoritative content for health terms.

EzineArticles

EzineArticles, which was heavily impacted the first time around, got nailed again, based on the data. SearchMetrics, looking at UK search data, has EzineArticles with a drop in search visibility of as much as 93.69%. Sistrix, looking at Europe, has the site as its number 2 loser with a change of -78%.

This is after an apparently rigorous focus on quality and guidelines following the U.S. update.

Mahalo

You may recall that after the U.S. update, Mahalo announced a 10% reduction in staff. “All we can do is put our heads down and continue to make better and better content,” CEO Jason Calacanis told us at the time. “If we do our job I’m certain the algorithm will treat us fairly in the long-term.”

Since the global roll-out, we’ve seen no indications from Calacanis or Mahalo that more layoffs are happening. “We were impacted starting on February 24th and haven’t seen a significant change up or down since then,” he told us.

Still, the SearchMetrics UK data has Mahalo at an 81.05% decrease in search visibility. The Sistrix data has the site at a -77% change.

“We support Google’s effort to make better search results and continue to build only expert-driven content,” Calacanis said. “This means any videos and text we make has a credentialed expert with seven years or 10,000 hours of experience (a la Malcolm Gladwell).”

HubPages

HubPages was hit the first time, and has been hit again. SearchMetrics has the site at -85.72%. Sistrix has it at -72%.

An interesting thing about HubPages is that a Googler (from the AdSense department) recently did a guest blog post on the HubPages blog, telling HubPages writers how to improve their content for AdSense.

Suite101

Suite101, another one of the biggest losers in the initial update, was even called out by Google as an example of what they were targeting. “I feel pretty confident about the algorithm on Suite 101,” Matt Cutts said in a Wired interview.

Suite101 CEO Peter Berger followed that up with an open letter to Matt Cutts.

This time Sistrix has Suite101 at a -79% change, and SearchMetrics has it at -95.39%. Berger told us this week, “As expected by Google and us, the international impact is noticeably smaller.”

Xomba

Xomba.com had a -88.06% change, according to the SearchMetrics data. They also had their AdSense ads temporarily taken away entirely. This doesn’t appear to be related to the update in any way, but it is still a terrible inconvenience that left the company and its users a little frantic at a time when their traffic was taking a hit too.

Google ended up responding and saying they’d have their ads back soon. All the while, Google still links to Xomba on its help page for “How do I use AdSense with my CMS?”

A lot of price comparison sites were also negatively impacted. In the UK, Ciao.co.uk was a big loser with -93.83% according to SearchMetrics.

You can see SearchMetrics’ entire list of losers here.
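For readers who want to sanity-check figures like these, here is a minimal sketch of the arithmetic behind a percentage change in visibility. The scoring formulas SearchMetrics and Sistrix use are not described in this article, so the "before" and "after" scores below are made-up numbers and the function names are our own; only the percent-change calculation itself is standard.

```python
def percent_change(before, after):
    """Percentage change from a 'before' score to an 'after' score."""
    if before == 0:
        raise ValueError("the 'before' score must be non-zero")
    return (after - before) / before * 100.0


def remaining_share(change_pct):
    """Fraction of the original score left after a given percentage change."""
    return 1.0 + change_pct / 100.0


# Hypothetical scores, chosen only to illustrate a steep drop.
before_score = 1000.0
after_score = 61.7

change = percent_change(before_score, after_score)
print(f"change: {change:.2f}%")
print(f"share of visibility remaining: {remaining_share(change):.4f}")
```

Read this way, a change in the -90% range means a site keeps less than a tenth of the visibility score it had before the update.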

The Winners

As there are plenty of losers in this update, somebody has to win, right? The big winners appear to be Google, Google’s competitors, news sites, blogs, and video sites. A few porn sites were sprinkled into the list as well.

All winner data is based on the SearchMetrics data of top 101 winners.

Google properties positively impacted:

– youtube.com gained 18.93% in visibility.

– google.com gained 6.14% in visibility.

– google.co.uk gained 3.99% in visibility.

– blogspot.com (Blogger) gained 22.8% in visibility.

– android.com gained 33.92% in visibility.

Google competitors positively impacted:

– yahoo.com increased 9.47%

– apple.com increased 15.19%

– facebook.com increased 9.14%

– dailymotion.com increased 17.80%

– wordpress.com increased 18.62%

– msn.com increased 8.13%

– metacafe.com increased 6.45%

– vimeo.com increased 18.85%

– flickr.com increased 12.39%

– typepad.com increased 43.86%

– tripadvisor.co.uk increased 7.81%

– mozilla.org increased 19.44%

– windowslive.co.uk increased 29.46%

– live.com increased 6.62%

News sites, blogs, and video sites positively impacted include (but are not limited to):

– youtube.com – 18.93% visibility increase

– telegraph.co.uk – 16.98% visibility increase

– guardian.co.uk – 9.73% visibility increase

– bbc.co.uk – 5.46% visibility increase

– yahoo.com – 9.47% visibility increase

– blogspot.com (Blogger) – 22.8% visibility increase

– dailymail.co.uk – 12.72% visibility increase

– dailymotion.com – 17.8% visibility increase

– ft.com – 16.17% visibility increase

– independent.co.uk – 21.53% visibility increase

– readwriteweb.com – 152.46% visibility increase

– theregister.co.uk – 13.47% visibility increase

– itv.com – 22.38% visibility increase

– cnet.com – 14.21% visibility increase

– mirror.co.uk – 24.87% visibility increase

– mashable.com – 22.61% visibility increase

– wordpress.com – 18.62% visibility increase

– techcrunch.com – 40.72% visibility increase

– time.com – 55.24% visibility increase

– metacafe.com – 6.45% visibility increase

– reuters.com – 36.82% visibility increase

– thenextweb.com – 3.85% visibility increase

– zdnet.co.uk – 34.04% visibility increase

– vimeo.com – 18.85% visibility increase

– typepad.com – 43.86% visibility increase

Bounce Rate Significance?

SearchMetrics sees a pattern in the winners, in that time spent on site is a major factor. “Compare the winners against the losers,” SearchMetrics CTO and Co-Founder Marcus Tober tells WebProNews. “It seems that all the loser sites are sites with a high bounce rate and a less time on site ratio. Price comparison sites are nothing more than a search engine for products. If you click on a product you ‘bounce’ to the merchant. So if you come from Google to ciao.co.uk listing page, than you click on an interesting product with a good price and you leave the page. On Voucher sites it is the same. And on content farms like ehow you read the article and mostly bounce back to Google or you click Adsense.”

“And on the winners are more trusted sources where users browse and look for more information,” he continues. “Where the time on site is high and the page impressions per visit are also high. Google’s ambition is to give the user the best search experience. That’s why they prefer pages with high trust, good content and sites that showed in the past that users liked them.”

“This conclusion is the correlation of an analysis of many Google updates from the last 6 months,” he adds. “Also the Panda US and UK updates.”
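To make the metrics Tober mentions concrete, here is a minimal sketch of how bounce rate, pages per visit, and time on site might be computed from visit data. The `Session` type, the `engagement_summary` function, and the sample numbers are all hypothetical, and a bounce is assumed here to mean a visit with a single pageview; this is not a description of how Google or SearchMetrics measure anything.

```python
from dataclasses import dataclass


@dataclass
class Session:
    pageviews: int          # pages viewed during the visit
    seconds_on_site: float  # total time spent on the site


def engagement_summary(sessions):
    """Return bounce rate, average pages per visit, and average time on site."""
    if not sessions:
        raise ValueError("need at least one session")
    total = len(sessions)
    # Assumption: a visit that views only one page counts as a bounce.
    bounces = sum(1 for s in sessions if s.pageviews <= 1)
    return {
        "bounce_rate": bounces / total,
        "pages_per_visit": sum(s.pageviews for s in sessions) / total,
        "avg_seconds_on_site": sum(s.seconds_on_site for s in sessions) / total,
    }


# Made-up visits: two single-page visits and two longer ones.
sample = [
    Session(pageviews=1, seconds_on_site=20.0),
    Session(pageviews=1, seconds_on_site=40.0),
    Session(pageviews=4, seconds_on_site=300.0),
    Session(pageviews=2, seconds_on_site=120.0),
]
summary = engagement_summary(sample)
print(summary)
```

Under Tober's reading, the sites that lost visibility would show a high `bounce_rate` and low values for the other two numbers, while the winners would show the opposite.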

The Future

It doesn’t look like Panda is slowing down search marketing ambition.

Well, SEO isn’t getting any easier, so that makes sense. Beyond the Panda update, it’s not like Google is going to slow down in its algorithm tweaks. As webmasters and publishers get used to the latest changes, more continue to pour out.

In an AFP interview, Google’s Scott Huffman says his team tested “many more than” 6,000 changes to the search engine in 2010 alone. 500 of them, he said, went on to become permanent changes. What are the odds that number will be lower in 2011?

Huffman also told the AFP that plenty of improvements are ahead, including those related to understanding inferences from different languages.

And let’s not forget the Google +1 button, recently announced. Google said flat out that the information would go on to be used as a ranking signal. Google specifically said it will “start to look at +1s as one of the many signals we use to determine a page’s relevance and ranking, including social signals from other services. For +1s, as with any new ranking signal, we’ll be starting carefully and learning how those signals affect search quality over time.”

Make friends with Google’s webmaster guidelines.

Have you been impacted by the global roll-out of Panda? For better or worse? Let us know in the comments.
