You’d probably laugh if some higher up at Little Caesars posted an epic diatribe blasting the state of modern pizza. You might scratch your head if this exec, let’s call him Bob, ranted about lesser quality pizza, and how the only thing we get anymore is the lowest common denominator pizza–the cheapest, flashiest, most easily digestible pizza that requires the least amount of effort. You’d most likely stare at Bob, with a look of unadulterated bewilderment as he lamented the dumbing down of pizza to its current easily palatable, but otherwise unfulfilling form.

You’d say, “But Bob, you’re part of the problem! Bob, you may just be the whole damn problem!”

Mike Hudack is a Director of Product Management at Facebook. Recently, he posted a 461-word teardown of the current state of media. Below, I’ll post the whole thing–but to sum it up, it goes a little something like this:

All we have these days is lesser quality journalism. The only thing we get anymore is the lowest common denominator journalism–the cheapest, flashiest, most easily digestible journalism that requires the least amount of effort. Journalism has been dumbed down to its current easily palatable, but otherwise unfulfilling form. We used to have live reporting from Baghdad on CNN, and now all we see are BuzzFeed listicles.

How much do you think Facebook contributes to the issues Hudack brings up? Let us know in the comments.

Mike Hudack is not wrong about this. Being totally off base is not what makes his post incredible in every single way. What makes it incredible is the fact that he works for Facebook.

“It’s hard to tell who’s to blame. But someone should fix this shit,” he says.

I’m going to need someone who studies cognitive dissonance to explain this to me before I blow a fuse trying to wrap my temperamental little writer brain around it.

Here’s the entire post, for context:

Please allow me to rant for a moment about the state of the media.

It’s well known that CNN has gone from the network of Bernie Shaw, John Holliman, and Peter Arnett reporting live from Baghdad in 1991 to the network of kidnapped white girls. Our nation’s newspapers have, with the exception of The New York Times, Washington Post and The Wall Street Journal been almost entirely hollowed out. They are ghosts in a shell.

Evening newscasts are jokes, and copycat television newsmagazines have turned into tabloids — “OK” rather than Time. 60 Minutes lives on, suffering only the occasional scandal. More young Americans get their news from The Daily Show than from Brokaw’s replacement. Can you even name Brokaw’s replacement? I don’t think I can.

Meet the Press has become a joke since David Gregory took over. We’ll probably never get another Tim Russert. And of course Fox News and msnbc care more about telling their viewers what they want to hear than informing the national conversation in any meaningful way.

And so we turn to the Internet for our salvation. We could have gotten it in The Huffington Post but we didn’t. We could have gotten it in BuzzFeed, but it turns out that BuzzFeed’s homepage is like CNN’s but only more so. Listicles of the “28 young couples you know” replace the kidnapped white girl. Same thing, different demographics.

We kind of get it from VICE. In between the salacious articles about Atlanta strip clubs we get the occasional real reporting from North Korea or Donetsk. We celebrate these acts of journalistic bravery specifically because they are today so rare. VICE is so gonzo that it’s willing to do real journalism in actually dangerous areas! VICE is the savior of news!

And we come to Ezra Klein. The great Ezra Klein of Wapo and msnbc. The man who, while a partisan, does not try to keep his own set of facts. He founded Vox. Personally I hoped that we would find a new home for serious journalism in a format that felt Internet-native and natural to people who grew up interacting with screens instead of just watching them from couches with bags of popcorn and a beer to keep their hands busy.

And instead they write stupid stories about how you should wash your jeans instead of freezing them. To be fair their top headline right now is “How a bill made it through the worst Congress ever.” Which is better than “you can’t clean your jeans by freezing them.”

The jeans story is their most read story today. Followed by “What microsoft doesn’t get about tablets” and “Is ’17 People’ really the best West Wing episode?”

It’s hard to tell who’s to blame. But someone should fix this shit.

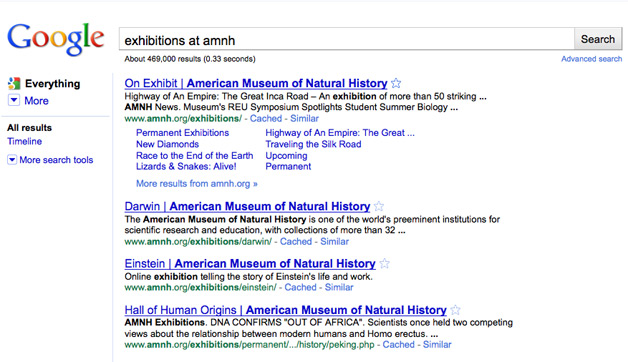

Take a look at your Facebook news feed real quick. What do you see? Do you see dozens of links to long-form stories about veterans affairs or the current unrest in Ukraine? Do you see links to thought pieces on pathways to renewable energy? Do you see stories about immigrants, wages, and economic disparity?

No, of course you don’t. You see “27 Types of Poop, and What They Really Mean.”

Why? Because that’s what works on Facebook. If you’re a website that produces original content, you rely (to a varying degree) on posts “going viral.” How do things “go viral”? They get shared on Facebook. And what kind of content gets shared on Facebook? Poop lists.

Sure, this is sort of an indictment of your friends and the human race in general. Poop lists are what people want to see. But more importantly, this is about Facebook and the complex, carefully guarded news feed algorithms that govern what we all see and how they choose to reward the very content that has Mike Hudack so incensed.

“Hey, Mike, I just sent you a tweetstorm, but let me reproduce it here: My perception is that Facebook is *the* major factor in almost every trend you identified. I’m not saying this as a hater, but if you asked most people in media why we do these stories, they’d say, ‘They work on Facebook.’ And your own CEO has even provided an explanation for the phenomenon with his famed quote, ‘A squirrel dying in front of your house may be more relevant to your interests right now than people dying in Africa.’ This is not to say we (the (digital) media) don’t have our own pathologies, but Google and Facebook’s social and algorithmic influence dominate the ecology of our world,” says The Atlantic’s Alexis Madrigal in a reply to his rant.

“And we (speaking for ALL THE MEDIA) would love to talk with Facebook about how we can do more substantive stuff and be rewarded. We really would. It’s all we ever talk about when we get together for beers and to complain about our industry and careers.”

Or, more succinctly, as Valleywag’s Sam Biddle put it: “Sorry, but Facebook is why BuzzFeed is the way it is.”

I think we can all agree that it would go a long way to solving the issues that Hudack laments if Facebook would lead the charge in promoting high-quality content.

Here’s the thing–they might not be able to, even if they wanted to.

If you own a Facebook page, you’re all too familiar with the big News Feed algorithm changes that Facebook made back in December. Whatever Facebook tweaked, it slashed organic reach for tons of pages. Of course, many argued that the point of all this was to force people to “pay to play,” or boost their posts through paid reach. Facebook vehemently denied this, saying that it was all about pushing higher quality content.

“Our surveys show that on average people prefer links to high quality articles about current events, their favorite sports team or shared interests, to the latest meme. Starting soon, we’ll be doing a better job of distinguishing between a high quality article on a website versus a meme photo hosted somewhere other than Facebook when people click on those stories on mobile. This means that high quality articles you or others read may show up a bit more prominently in your News Feed, and meme photos may show up a bit less prominently,” said Engineering Manager Varun Kacholia at the time.

OK, so if Facebook is committed to pushing more high quality content, then why are we all still seeing so many poop lists?

Turns out, Facebook’s algorithms are simply bad at determining quality.

WebProNews’ own Chris Crum explained this beautifully back in February, while discussing why BuzzFeed is able to get so much Facebook traffic when you can’t:

What this is really about is likely Facebook’s shockingly unsophisticated methods for determining quality. The company has basically said as much. Peter Kafka at All Things D (now at Re/code) published an interview with Facebook News Feed manager Lars Backstrom right after the update was announced.

He said flat out, “Right now, it’s mostly oriented around the source. As we refine our approaches, we’ll start distinguishing more and more between different types of content. But, for right now, when we think about how we identify “high quality,” it’s mostly at the source level.”

That’s what it comes down to, it seems. If your site has managed to make the cut at the source level for Facebook, you should be good regardless of how many GIF lists you have in comparison to journalistic stories. It would seem that BuzzFeed had already done enough to be considered a viable source by Facebook, while others who have suffered major traffic hits had not. In other words, Facebook is playing favorites, and the list of favorites is an unknown.

Just to make this point clear, Kafka asked in that interview, “So something that comes from publisher X, you might consider high quality, and if it comes from publishers Y, it’s low quality?”

Backstrom’s answer was simply, “Yes.”

So as long as BuzzFeed is publisher X, it can post as many poop lists as it wants with no repercussions, apparently. It’s already white-listed. Meanwhile, you can be publisher Y and break the news about the next natural disaster, and it means nothing. At least not until Facebook’s methods get more sophisticated.

Not only that, but Hudack’s sharing of the Vox.com article that apparently served as the catalyst for his irony-soaked post is likely part of the problem as well. Here, he shares the article to talk shit about it, but does it really matter why he shared it? He shared it. It’s the circle of strife.

Hudack has responded to many comments on his post, saying that he acknowledges Facebook could do more to stop “sending traffic to shitty listicles,” and that there are people within the company that would like to see a change:

“This thread is awesome. Really awesome. I don’t work on Newsfeed or trending topics, so it’s hard for me to speak authoritatively about their role in the decline of media. But I’d argue that 20/20 turned into “OK” before Facebook was really a thing, and CNN stopped being the network reporting live from Baghdad before Facebook became a leading source of referral traffic for the Internet,” he says.

“Is Facebook helping or hurting? I don’t honestly know. You guys are right to point out that Facebook sends a lot of traffic to shitty listicles. But the relationship is tautological, isn’t it? People produce shitty listicles because they’re able to get people to click on them. People click on them so people produce shitty listicles. It’s not the listicles I mind so much as the lack of real, ground-breaking and courageous reporting that feels native to the medium. Produce that in a way that people want to read and I’m confident that Facebook and Google and Twitter will send traffic to it.

“And, to be clear, there are many people at Facebook who would like to be part of the solution and not just part of the problem. I’m sure that we’re all open to ideas for how we could improve the product to encourage the distribution of better quality journalism. I, for one, am all ears.”

He also acknowledges a “filter bias”:

“[C]ulture matters. I also want to emphasize that I don’t work on Feed or Trending Topics, and that I speak for myself and not the company or the folks who work hard to make Feed and Trending Topics better. I know those guys, but I can’t speak for them. I understand that there are such things as filter bias, and I think that you’re right that all of the players in the ecosystem can get better at this.”

In the end, however, Hudack says his comments were about a true degradation in reporting quality.

“What I’m seeing, though, is a general degradation of real reporting. Perhaps it’s easier to imagine me as an aging newspaperman looking around the newsroom saying ‘Where did the Woodwards and Bernsteins go?’”

Of course, no one entity is to blame for the fact that your newsfeed is littered with listicles and articles about freezing your jeans. But you can’t advertise $5 Hot-n-Ready pizzas and then bitch when pizza everywhere tastes like cardboard, ketchup, and Velveeta.

What do you think? Is Facebook to blame? What can be done about it? Would it help if Facebook were more open about how their news feed algorithms work, or do they simply need to get better at filtering high-quality content? Let us know in the comments.

Image via Facebook Menlo Park, Facebook