Google has posted a thought-provoking piece to the Official Google Blog that discusses at length Google’s system for understanding synonyms in search. As author Steven Baker says, "An irony of computer science is that tasks humans struggle with can be performed easily by computer programs, but tasks humans can perform effortlessly remain difficult for computers."

Google considers understanding human language to be one of the hardest problems in artificial intelligence, and the key to returning the best possible search results. While its synonym system is far from perfect, Google has invested a great deal of time in it (five years of research, to be exact).

To cut to the chase, here are some things pertaining to Google’s handling of synonyms that you should keep in mind:

1. Google constantly monitors how its synonym system affects the relevance of search results.

2. Google says synonyms affect 70% of user searches across over 100 languages.

3. For every 50 queries where synonyms significantly improve search results, Google has only found one "truly bad" synonym.

4. Google does not normally fix bad synonyms by hand. Instead, it changes its algorithms to try to correct the problem. "We hope it will be fixed automatically in some future changes," Baker says.

5. Google has recently changed how synonyms are displayed: in SERP snippets, synonyms are now bolded, just like the words you actually searched for.

6. Google uses "many techniques" to extract synonyms. Its systems analyze petabytes of data to build "an intricate understanding of what words can mean in different contexts."

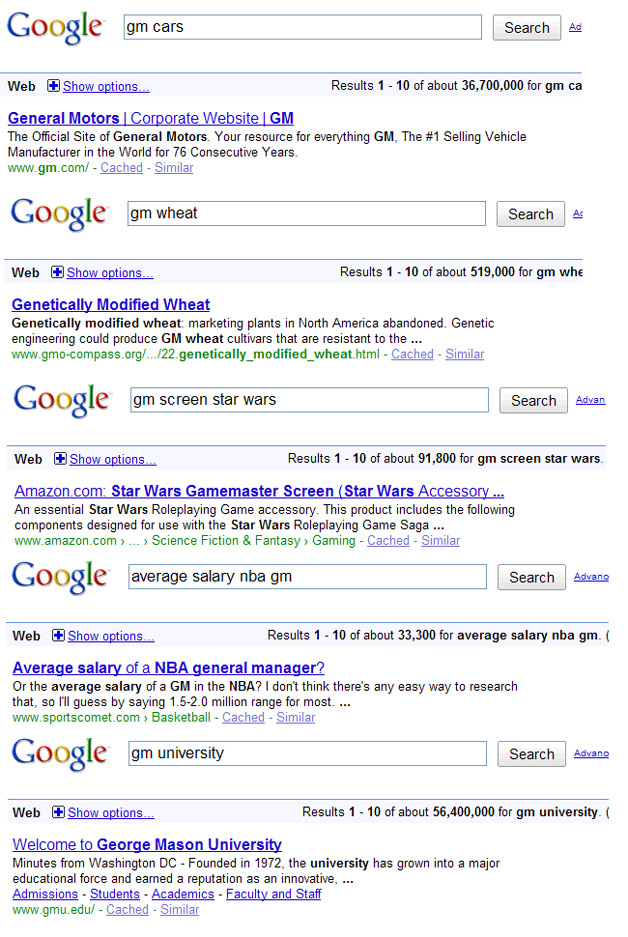

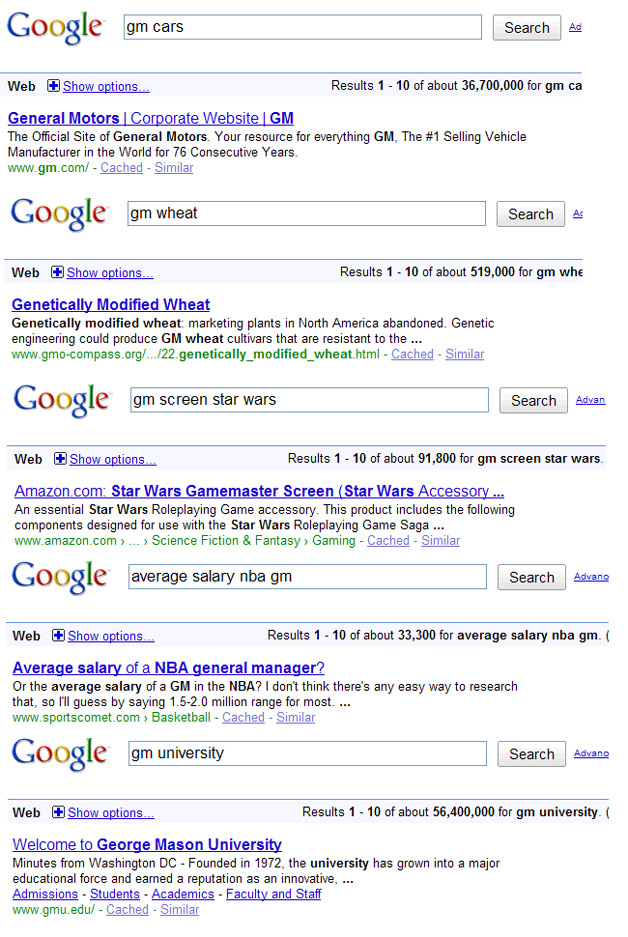

7. Some words or initials can have tons of different meanings, and Google uses the other words in the query to help determine the right one. For example, Google’s system knows something about more than 20 possible meanings of "GM."

8. Google includes variants of terms (such as singular and plural forms) within its "umbrella of synonyms."

9. Google still makes mistakes with synonyms.

10. You can turn off a synonym in a search by adding a "+" before the term or by putting the words in quotation marks.

Google wants feedback on algorithm mistakes. It accepts reports through the Web Search Help Center forum or via the Twitter hashtag #googlesyns.

It will be interesting to see how far Google progresses in natural language search, because Baker is right that understanding language is key to providing more relevant results. If Google can understand exactly what we mean without us having to tweak our wording much, that will be a tremendous stride for search. Instead of us trying to figure out what Google wants us to say, Google would simply understand what we say. In the meantime, people have luckily gotten much better at searching over the years, learning to enter longer, more specific queries.
